Faith-based Futurism

Do you believe in digital deities?

Priests of the Cyborg Theocracy speak of “superhuman” intelligence so often, it’s almost banal. Every new gadget appears to them as a prophecy fulfilled. It’s as if artificial intelligence will be the second coming of Christ, although I doubt these guys believe in the first coming.

Last week OpenAI CEO Sam Altman speculated that the advent of godlike AI “may turn out to be the most consequential fact about all of history so far.” He went on to predict, “It is possible that we will have superintelligence in a few thousand days!”

Is that in ten years? Twenty? Talk about hedging your bet.

Altman wrote this in a September 23 blog post entitled “The Intelligence Age.” The title brings to mind spies who comb through a target’s private life, looking for psychological pressure points and compromising material—an age of intelligent agents and casual surveillance. In tune with that vibe, OpenAI now has a former NSA chief on its board.

You also have an OpenAI collaborator, the new CEO of Microsoft AI, Mustafa Suleyman, depicting digital agents as something akin to guardian angels, or maybe more like old-school human intel agents. “Your AI should remember everything about you,” Suleyman recently said of the Copilot app, “all your contacts, all your personal data—everything you’ve said—and be there to support you...throughout your life.”

Many talk this way, including Altman, who envisions AI as a “super-competent colleague that knows absolutely everything about my whole life, every email, every conversation I’ve ever had, but doesn’t feel like an extension.”

Like the theocrats of old, these priests want to populate the world with invisible spies—not just in your imagination, but on all of your devices.

Meanwhile, the AI race points toward an increasingly militarized Future™. Microsoft is working to revive the nuclear reactor on Three Mile Island in Pennsylvania to power its massive data centers. They’re following Amazon’s lead, and others are sure to join. Nuclear-powered AI is shaping up to be a new normal.

Earlier this year, former Google exec Eric Schmidt described the techno-military hellscape this could soon evolve into. "Eventually, in both the US and China, I suspect there will be a small number of extremely powerful computers with the capability for autonomous invention that will exceed what we want to give either to our own citizens without permission or to our competitors," Schmidt told Noema magazine.

In other words, the largest AI systems will be so intelligent, so powerful, they’ll need to be guarded like temple idols, lest the unwashed make contact with them. “They will be housed in an army base, powered by some nuclear power source and surrounded by barbed wire and machine guns.”

This unholy spirit is in the air. It seems like anybody who’s anybody feels its presence.

On September 24, the day after Altman posted “The Intelligence Age,” Klaus Schwab published an essay at the World Economic Forum site with the same theme. Where Altman looked to the future, though, Schwab focused on the ongoing transformation taking place under our noses. “The Intelligent Age—driven by rapid advancements in artificial intelligence, quantum computing, and blockchain—is transforming everything and changing it right now, in real time.”

As usual, Schwab calls this an opportunity for greater “global cooperation.” In fact, this will be the theme of the upcoming WEF meeting in Davos. It’s as if digital spirits have been unleashed upon the planet and are now working their magic on the human inhabitants. Even Davos Man is starting to worry whether he’ll get a big enough piece of the action.

To my surprise, the next day—September 25—Ivanka Trump recommended a futurist essay that’s been making the rounds lately. It was hammered out by a whiz kid who recently left OpenAI to independently pursue his goal of steering godlike AI in a positive direction. The objective is to guide the Machine toward human alignment—or rather, toward “superalignment,” which probably pays better.

“Leopold Aschenbrenner’s ‘Situational Awareness’ predicts we are on course for Artificial General Intelligence (AGI) by 2027,” Ivanka wrote, “followed by superintelligence shortly thereafter, posing transformative opportunities and risks. This is an excellent and important read.”

It truly is a significant paper, but not necessarily for its technical rigor. Aschenbrenner certainly lays out his case well enough. Like many a pop futurist, he charts the recent advances in AI capabilities. He then projects these trends onto an exponential curve into the future. Just look at the line going up and up:

“Before we know it, we would have superintelligence on our hands—AI systems vastly smarter than humans, capable of novel, creative, complicated behavior we couldn’t even begin to understand—perhaps even a small civilization of billions of them. Their power would be vast, too. … [S]oon they’d solve robotics, make dramatic leaps across other fields of science and technology within years, and an industrial explosion would follow.

“Superintelligence would likely provide a decisive military advantage, and unfold untold powers of destruction. We will be faced with one of the most intense and volatile moments of human history.”

Therefore, we need to figure out how to either keep these digital gods under control, or at the very least, to make sure they’re merciful toward our side.

I’m reminded of the 90s, when the internet was set to become the next big thing. At the same time, fire-breathing preachers warned of the coming Antichrist, while Hollywood made films about alien invasions and genocidal robots.

A decade or so later, the internet was a massive profit engine doubling as a surveillance apparatus. The alien invasion actually arrived in the form of black monoliths held in human palms, each one driving our cultural evolution. Skynet may not have gained sentience, but then again, neither did most screen-glued humans.

The rise of artificial general intelligence, or artificial superintelligence, will likely be quite similar. Complex algorithms will indeed be “godlike”—with a little “g”—in the sense that they’ll generate new types of information that humans couldn’t attain on their own. This is already happening, from biology and medicine to private data mining.

As AI becomes a primary source of information—indeed, as it becomes the highest authority on many subjects—people will supplicate to it, defer to its decrees, and elevate its priests. First, they will call AI a “tool,” then a “teacher,” then a “friend” or even a “lover,” and in the end, many will call it “God.” But as with all technologies, the hype will outstrip the reality. It’ll probably be annoying and outright destructive in ways no one could anticipate.

I view these futurist prophecies the way an indecisive atheist looks at a megachurch, or an ambivalent Marxist sees a megacorporation. In essence, I’m intensely skeptical about the sincerity and good will of the Cyborg Theocracy and its priests. But that doesn’t mean I’m not concerned about their rising techno-military power structure. Perhaps most of all, I’m bothered by their power to brainwash my fellow humans at scale.

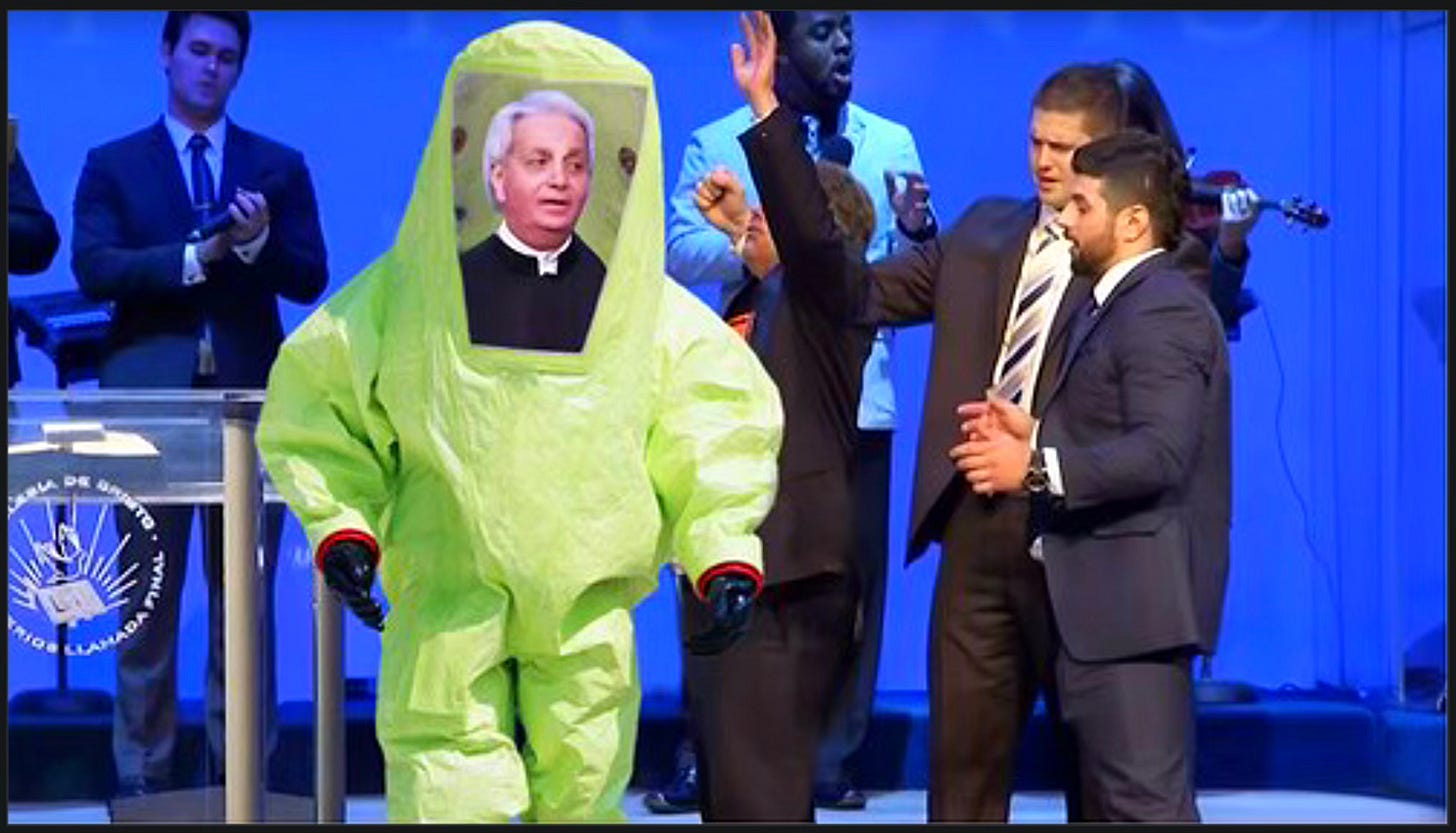

Try to think of it the way an atheist would perceive a high-profile cult. Even if a charlatan’s god doesn’t really exist, the charlatan can still build a massive temple. He can rake in millions from a gullible congregation. If he’s clever, he can even engineer phony “miracles” to keep his flock enthusiastic.

Is this how artificial “godlike” intelligence will end up? Just a lot of hype, a lot of suckers, and a lot of money funneled upward? Will Big Tech be like a new Trinity Broadcasting Network, deploying billions of personalized Benny Hinns to heal the world? Given the long history of failed prophecies, it’s easy to imagine such a disappointing outcome.

Then again, what if the prophecies of the Cyborg Theocracy do come to pass? Or what happens if they’re realized just enough to keep the enterprise going? Anything is possible, so I don’t dismiss these extraordinary ideas out of hand.

Imagine one or many superintelligent entities emerging from nuclear-powered data centers. Imagine an algo-god incarnating into a global digital system of surveillance sensors, hypnotic screens, humanoid robots, and swarms of death drones. Imagine that this digital Beast views us humans, not as a parent species, but rather as biofuel.

That’s all too easy to imagine, as we can readily observe in decades of science fiction. Today, it’s being presented as an imminent reality.

Of course, back in the late 90s, it was also easy for the Heaven’s Gate cult to imagine benevolent aliens in an invisible spaceship. They were certain these superhuman beings were out there, hovering behind the Hale-Bopp comet, eager to do us favors. In fact, the Heaven’s Gate cultists were so convinced that superintelligent ETs were real, they were willing to cut their own balls off and drink suicide vodka in order to meet them.

As we stand on the cusp of “The Intelligence Age,” it’ll be important to keep your eyes peeled and keep an open mind. Many things are possible, from smart-ass computers to alien overlords. Even so, never discount the possibility that you’re getting played by futurist salesmen—or their counterparts, the dystopian bullshitters. Some of these people are so cynical, they’d have you cut your own balls off for nothing.